- How to install Apache Spark on OS X


I am aware of the several packages available, notably MAMP. These packages help you get started quickly, but they forgo the learning experience and, as most developers report, eventually break. Personally, the choice to do it myself has proven invaluable.

It is important to remember that Mac OS X runs atop UNIX, so Apache, MySQL, and PHP all install easily on Mac OS X. Furthermore, Apache and PHP are included by default.


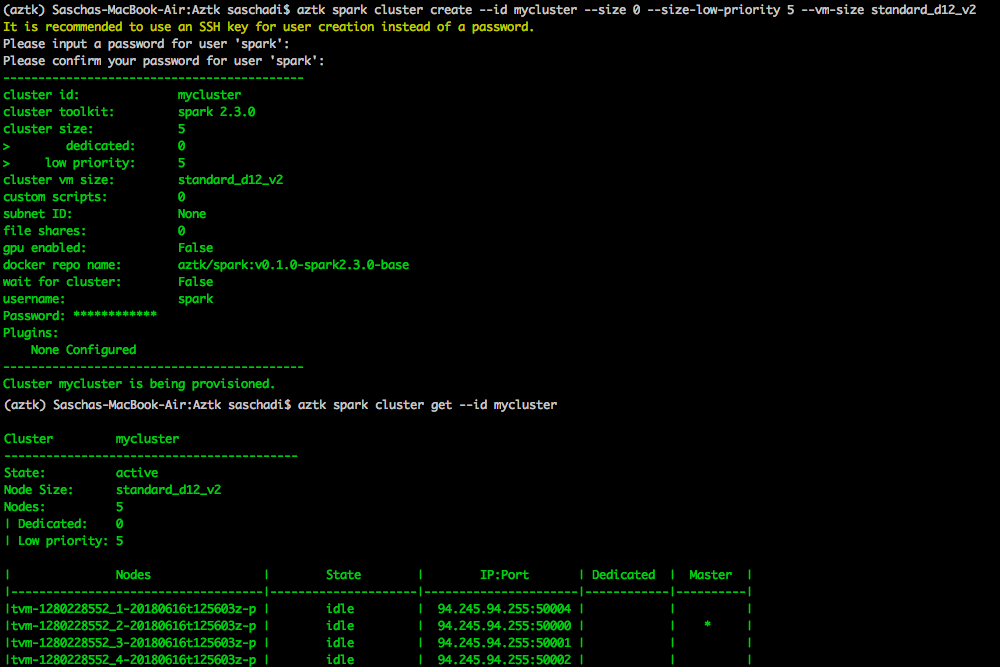

macOS Update: While these instructions still work, there are new posts for recent versions of macOS, the latest being Install Apache, PHP, and MySQL on macOS Mojave.

I have installed Apache, PHP, and MySQL on Mac OS X since Leopard, with each version of Mac OS X having some minor differences. This post serves as much for my own record as to outline how to install Apache, MySQL, and PHP for a local development environment on Mac OS X Mountain Lion and Mavericks.

Thin Client is a lightweight Ignite connection mode. It does not participate in the cluster, never holds any data, and never performs computations. All it does is establish a socket connection to one or multiple Ignite nodes and perform all operations through those nodes.

I got a new Mac and I am trying to set up a pyspark project. I followed the same steps, but I keep getting the following error. I googled for a whole day but could not figure out what's going on.

    file:/Users/adwive1/.m2/repository/org/apache/commons/commons-compress/1.4.1/commons-compress-1.4.1.jar
    #commons-compress 1.4.1!commons-compress.jar (2ms)
    :: ^ see resolution messages for details ^ ::
    :: #commons-compress 1.4.1!commons-compress.jar
    :: USE VERBOSE OR DEBUG MESSAGE LEVEL FOR MORE DETAILS
    Exception in thread "main" :
    at .SparkSubmitUtils$.resolveMavenCoordinates(SparkSubmit.scala:1303)
    at .DependencyUtils$.resolveMavenDependencies(DependencyUtils.scala:53)
    at .SparkSubmit$.doPrepareSubmitEnvironment(SparkSubmit.scala:364)
    at .SparkSubmit$.prepareSubmitEnvironment(SparkSubmit.scala:250)
    at .SparkSubmit$.submit(SparkSubmit.scala:171)
    at .SparkSubmit$.main(SparkSubmit.scala:137)
    at .SparkSubmit.main(SparkSubmit.scala)
    File "run_all_unit_tests.py", line 28, in
    test_class = test_test_class(module.test_schema)
    File "/Users/adwive1/IdeaProjects/data-catalyst-poc/application/tests/test_schema/generator.py", line 25, in generate_test_class
    add_data_attr(test_class_attr, data_file_map, data_schema)
    File "/Users/adwive1/IdeaProjects/data-catalyst-poc/application/tests/test_schema/generator.py", line 55, in add_data_attr
    add_data_attr_item(test_class_attr, input_df_name, input_file_path, schema=schema)
    File "/Users/adwive1/IdeaProjects/data-catalyst-poc/application/tests/test_schema/generator.py", line 66, in add_data_attr_item
    File "/Users/adwive1/IdeaProjects/data-catalyst-poc/application/tests/helper_test.py", line 106, in load_csv_infer_schema
    File "/Users/adwive1/IdeaProjects/data-catalyst-poc/application/tests/helper_test.py", line 75, in start_spark_session
    .config('spark.jars', spark_jars_props) \
    File "/usr/local/Cellar/apache-spark/2.3.1/libexec/python/pyspark/sql/session.py", line 173, in getOrCreate
    File "/usr/local/Cellar/apache-spark/2.3.1/libexec/python/pyspark/context.py", line 343, in getOrCreate
    File "/usr/local/Cellar/apache-spark/2.3.1/libexec/python/pyspark/context.py", line 115, in __init__
    SparkContext._ensure_initialized(self, gateway=gateway, conf=conf)
    File "/usr/local/Cellar/apache-spark/2.3.1/libexec/python/pyspark/context.py", line 292, in _ensure_initialized
    SparkContext._gateway = gateway or launch_gateway(conf)
    File "/usr/local/Cellar/apache-spark/2.3.1/libexec/python/pyspark/java_gateway.py", line 93, in launch_gateway
    raise Exception("Java gateway process exited before sending its port number")
    Exception: Java gateway process exited before sending its port number

Do you by any chance happen to see this error?

PS: brew install apache-spark installs 2.3.
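One way to read a log like the one in this comment: the Python exception ("Java gateway process exited before sending its port number") is only the symptom, and the JVM frames printed before it hint at why the Java process exited. The sketch below is a rough heuristic, not part of pyspark. The helper name `classify_gateway_failure` and its labels are hypothetical, and it assumes that a `resolveMavenCoordinates` frame means the JVM stopped while resolving jar dependencies.

```python
import re

# Python-side symptom reported by pyspark when the JVM exits early.
GATEWAY_ERROR = "Java gateway process exited before sending its port number"

# JVM frames as they appear in the log; matching on them is a heuristic
# (an assumption for illustration), not a Spark API.
RESOLVE_FRAME = re.compile(r"SparkSubmitUtils\$\.resolveMavenCoordinates")
JVM_EXCEPTION = re.compile(r'Exception in thread "main"')


def classify_gateway_failure(log_text: str) -> str:
    """Return a coarse label for a pyspark startup log (hypothetical helper)."""
    if GATEWAY_ERROR not in log_text:
        return "no-gateway-error"
    if RESOLVE_FRAME.search(log_text):
        # The JVM was still resolving dependencies when it exited.
        return "dependency-resolution"
    if JVM_EXCEPTION.search(log_text):
        return "jvm-exception"
    return "unknown"


# A few lines copied from the reported log, in the order they were printed.
sample_log = "\n".join([
    ":: USE VERBOSE OR DEBUG MESSAGE LEVEL FOR MORE DETAILS",
    'Exception in thread "main" :',
    "at .SparkSubmitUtils$.resolveMavenCoordinates(SparkSubmit.scala:1303)",
    "Exception: Java gateway process exited before sending its port number",
])

print(classify_gateway_failure(sample_log))  # prints: dependency-resolution
```

If the label is `dependency-resolution`, the jar path named in the log (here under `~/.m2/repository`) is a reasonable first place to look, although the log alone does not confirm the root cause.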
